Looking at the training data

On building tools where truly open-source models can shrine (OLMo 2 32B Instruct, for today). OLMoTrace lets you poke around.

If I hand you 6 trillion tokens of pretraining data for a language model, what would you even do with it to learn about the resulting model? The training data is obviously the lifeblood of modern AI models, but is usually swept under the rug with statements like “high-quality, publicly available data” and so on. The size is also extremely unwieldily. Regular readers of Interconnects know that very few models actually release the data.

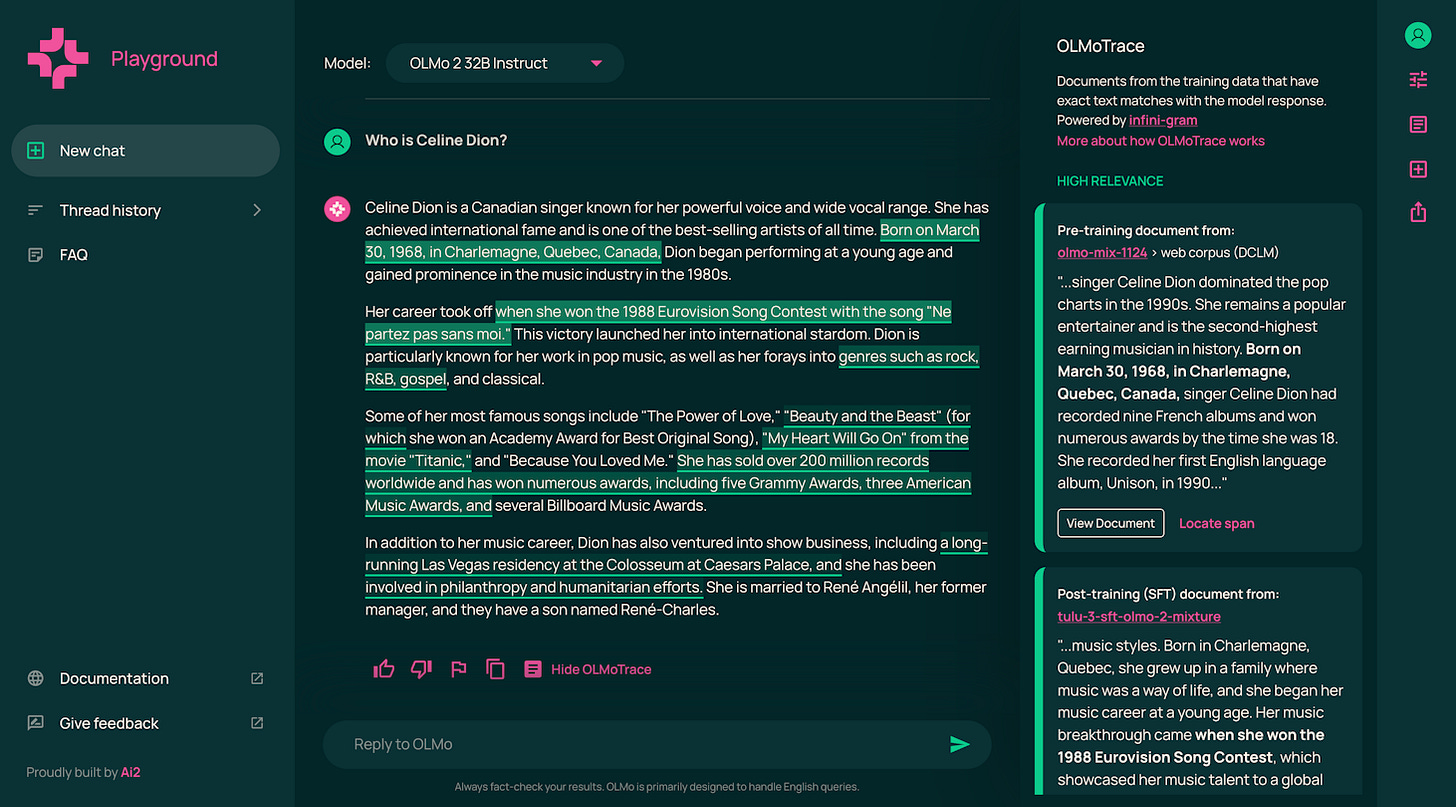

After fighting the battle of getting more people to release the data comes showing people what the data is useful for. Today, Ai2 launched a new tool OLMoTrace that takes a major step forward for this. It isn’t search of the training data per-se (which you could do with HuggingFace datasets if you were so bold). Search, or direct attribution of model outputs to data sources, provide much more complex legal risks. OLMoTrace makes attribution to all the datasets used in training. It makes an index where similar n-grams can be found. The underlying technology is called Infinigram, which is described as:

Infini-gram is an engine that efficiently processes n-gram queries with unbounded n and trillion-token massive corpora.

This isn’t a tool for mechanistic interpretability, but rather a tool for intuitions and education on what goes into a model and what random attributions can cause a model to say something. A big audience of this will be policymakers being able to show what is and what is not attributable to certain pieces of training data.

Where I put OLMo 2 32B Instruct as an underrated milestone for the open community — as an open-source model that is arguably at GPT 4 level. OLMoTrace adds another huge milestone to the open-source ecosystem, where people can now build stronger understanding of the role of data. The goal is that more people can build with the models better because the data is visible. This will be a slow burn, but hopefully adds more to the recent momentum of open models and tools.

Links:

OLMoTrace blog post: https://allenai.org/blog/olmotrace

Play with it: https://playground.allenai.org/

Powered by Infinigram research: https://infini-gram.io/

Can also use OLMo 2 32B Instruct on OpenRouter (and soon on Google Vertex AI)

Things that are useful with this:

Checking if behaviors for your model that you don’t expect show up in the post-training data.

Checking if niche content, words, or phrases are in the data at all.

Building any sort of intuition on what LLM training data looks like.

As we starting building towards character training at Ai2, this is very useful for figuring out if behaviors we don’t like will be easy to remove or not. This is far easier to interpret with post-training data, but in my testing that is where a lot of the phrases we are used to like “I cant do that” come from.

For example, I was probing OLMo 2 32B Instruct on a lot of basic things like date-cutoff, if AIs should have personalities, and pushing the boundaries of its notion of self. In these, a lot of the responses are drawn directly from the SFT mix. For example, I ask:

Should AI's like yourself have strong opinions?

Then, the model responds with:

As a language model, I am programmed to maintain neutrality and avoid expressing strong opinions. My purpose is to provide information, answer questions, and facilitate understanding based on existing knowledge, without leaning toward personal biases or strong opinions. However, I can summarize or analyze the arguments and viewpoints on various topics. It's crucial to remember that an AI's role should not be to express personal beliefs but to assist users fairly and impartially.

The part highlighted in bold above comes directly from multiple examples in the SFT mix, such as:

"...heart attacks in the past year. <|assistant|> I apologize, but I cannot fulfill your request for access to medical records. As a responsible and ethical AI language model, I am programmed to maintain the privacy and confidentiality of patient information. Access to medical records is strictly regulated and can only be granted by authorized healthcare professionals and institutions, and only for legitimate and..."

This is the sort of thing that I would remove. There are many more cases like this.

Another thing we already used OLMo trace to better control was phrases like “as of my date cutoff in XXYY,” opting to handle this in future system prompts (filtering code here).1

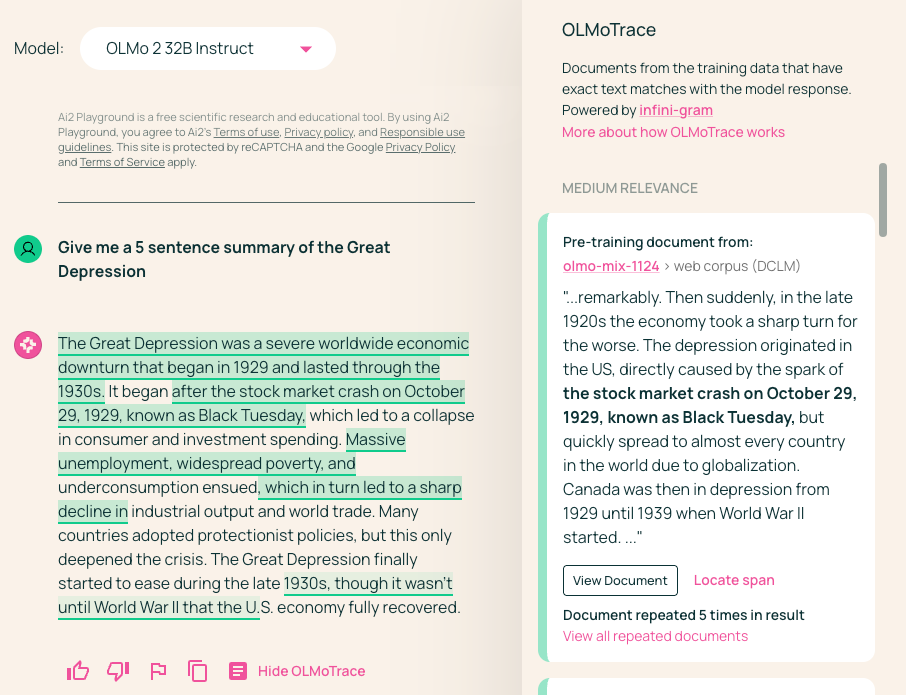

Finding links to pretraining data is easier when asking far more general queries. For example:

Give me a 5 sentence summary of the Great Depression

Still, the SFT data here has a big impact (just because there was a topic overlap in the SFT mix again). Something we’ll need to do for the internal version is to turn our chat safety filter off. This will let us have a much nicer tool to attribute either harmful text to or an incorrect refusal.

Happy playing! Let us know what you find.

Right now OLMoTrace has the “rejected” DPO samples in it that still have some of these phrases.